Antenna Tuner IC

Audio Amplifier

Bluetooth Audio SoC

Charging IC

Clock IC

CODEC

Envelope Tracker

IoT Chip

Low Noise Amplifier (LNA)

Mobile Station Modem

Modem Chip

Network RF Chip

Networking Chip

PHY Chip

PMIC

Power Amplifier

Power Tracker

RF IC

System on Chip (SoC)

Wi-Fi Chip

Wireless Communication Module

Wireless Network Chip

MediaTek

MT8167A/D

MediaTek

MT7981AA

MediaTek

MT7976DAN

MediaTek

MT7931AN

MediaTek

MT7688A

MediaTek

MT7668BU

MediaTek

MT7628A

MediaTek

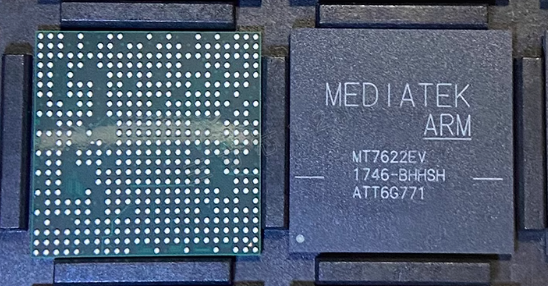

MT7622EV

MediaTek

MT7615D

MediaTek

MT7602E

MediaTek

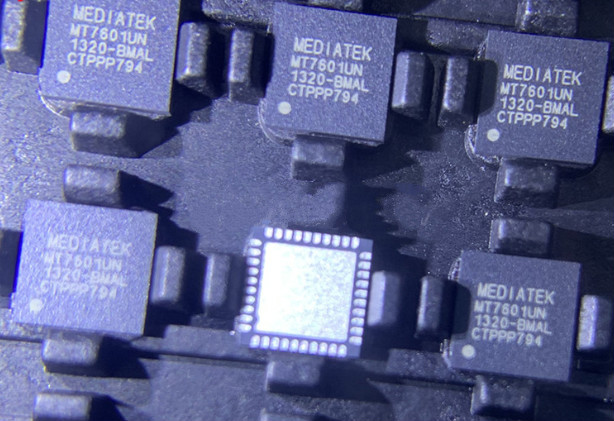

MT7601UN/A-L

MediaTek

MT7530W

MediaTek
