Micron formally announced its 12-Hi HBM3E memory stacks on Monday. The new products feature a 36 GB capacity and are aimed at leading-edge processors for AI and HPC workloads, such as Nvidia's H200 and B100/B200 GPUs.

Micron's 12-Hi HBM3E memory stacks offer 36 GB of capacity, 50% more than the previous 8-Hi versions, which had 24 GB. The extra capacity allows data centers to run larger AI models, such as the 70-billion-parameter version of Llama 2, on a single processor. Keeping the whole model in the processor's local memory reduces the need for CPU offloading and avoids communication delays between GPUs, speeding up data processing.
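The capacity figures can be checked with simple arithmetic. The sketch below assumes 24 Gb DRAM dies (so 12 dies give 36 GB and 8 dies give 24 GB) and a hypothetical package with six HBM stacks; the constants `GBIT_PER_DIE` and `STACKS` are illustrative assumptions, not Micron or GPU-vendor specifications. It counts only 16-bit model weights and ignores the KV cache and activations, so it is a rough illustration rather than a sizing tool.

```python
# Rough HBM capacity arithmetic. Die density and stack count are assumptions
# chosen for illustration, not figures from Micron or any GPU vendor.

GBIT_PER_DIE = 24  # assumed DRAM die density in gigabits
STACKS = 6  # hypothetical number of HBM stacks on one processor package


def stack_capacity_gb(dies: int, gbit_per_die: int = GBIT_PER_DIE) -> float:
    """Capacity of one HBM stack in GB (8 bits per byte)."""
    return dies * gbit_per_die / 8


def weights_gb(params_billion: float, bytes_per_param: float) -> float:
    """Memory for model weights only, in GB (1e9 params x bytes per param)."""
    return params_billion * bytes_per_param


gain = stack_capacity_gb(12) / stack_capacity_gb(8) - 1
print(f"12-Hi vs 8-Hi capacity gain: {gain:.0%}")  # 50%

need = weights_gb(70, 2)  # a 70B-parameter model at 16-bit precision
for name, dies in (("8-Hi", 8), ("12-Hi", 12)):
    total = STACKS * stack_capacity_gb(dies)
    print(f"{name}: {total:.0f} GB total, {total - need:.0f} GB left after weights")
```

Under these assumptions, six 8-Hi stacks leave only a few gigabytes beyond the weights, while six 12-Hi stacks leave tens of gigabytes for the KV cache and activations.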

In terms of performance, Micron's 12-Hi HBM3E stacks deliver over 1.2 TB/s of memory bandwidth, with per-pin data transfer rates exceeding 9.2 Gb/s. According to the company, the new stacks consume less power than competitors' 8-Hi 24 GB HBM3E stacks despite offering 50% more memory capacity.
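The bandwidth figure follows from the pin rate and the interface width. HBM stacks expose a 1024-bit interface, so peak per-stack bandwidth is roughly the per-pin rate times 1024 bits, divided by 8 to get bytes. The minimal sketch below uses decimal units (1 TB/s = 1000 GB/s); the function names are hypothetical helpers for illustration.

```python
# Peak per-stack HBM bandwidth from pin rate. HBM stacks use a 1024-bit
# interface; decimal units are used (1 TB/s = 1000 GB/s).

BUS_WIDTH_BITS = 1024


def stack_bandwidth_tbps(pin_rate_gbps: float, bus_width_bits: int = BUS_WIDTH_BITS) -> float:
    """Peak bandwidth of one stack in TB/s for a given per-pin rate in Gb/s."""
    return pin_rate_gbps * bus_width_bits / 8 / 1000


def required_pin_rate_gbps(target_tbps: float, bus_width_bits: int = BUS_WIDTH_BITS) -> float:
    """Per-pin rate in Gb/s needed to reach a target per-stack bandwidth."""
    return target_tbps * 1000 * 8 / bus_width_bits


print(f"9.2 Gb/s per pin -> {stack_bandwidth_tbps(9.2):.2f} TB/s")
print(f"1.2 TB/s needs about {required_pin_rate_gbps(1.2):.3f} Gb/s per pin")
```

At exactly 9.2 Gb/s the formula gives roughly 1.18 TB/s, so the "over 1.2 TB/s" figure implies pin rates somewhat above 9.2 Gb/s, around 9.4 Gb/s or more.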

Micron's 12-Hi HBM3E includes a fully programmable memory built-in self-test (MBIST) system intended to shorten time-to-market and improve reliability for its customers. MBIST can simulate system-level traffic at full speed, allowing thorough testing and quicker validation of new systems.

Micron's HBM3E memory devices are compatible with TSMC's chip-on-wafer-on-substrate (CoWoS) packaging technology, which is widely used for packaging AI processors such as Nvidia's H100 and H200.

“TSMC and Micron have enjoyed a long-term strategic partnership,” said Dan Kochpatcharin, head of the Ecosystem and Alliance Management Division at TSMC. “As part of the OIP ecosystem, we have worked closely to enable Micron's HBM3E-based system and chip-on-wafer-on-substrate (CoWoS) packaging design to support our customer's AI innovation.”

Micron is shipping production-ready 12-Hi HBM3E units to key industry partners for qualification testing throughout the AI ecosystem.

Other

Qualcomm Announces Snapdragon 7s Gen 3 For Mid-Range Devices With AI Capabilities

2024.08.21

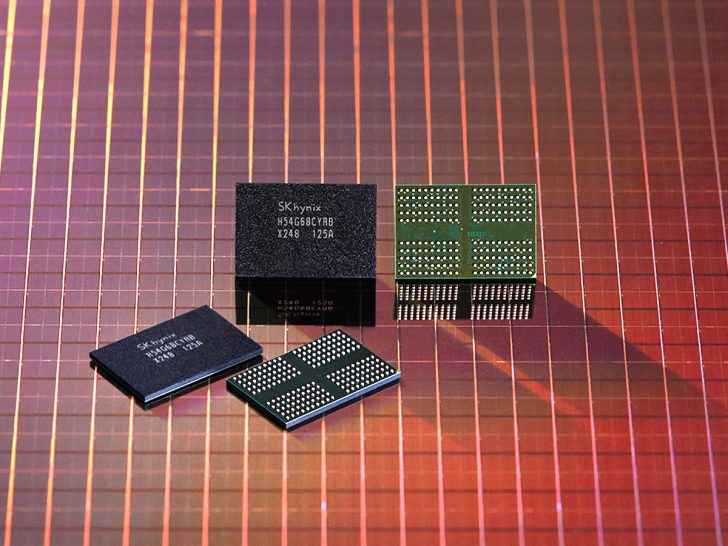

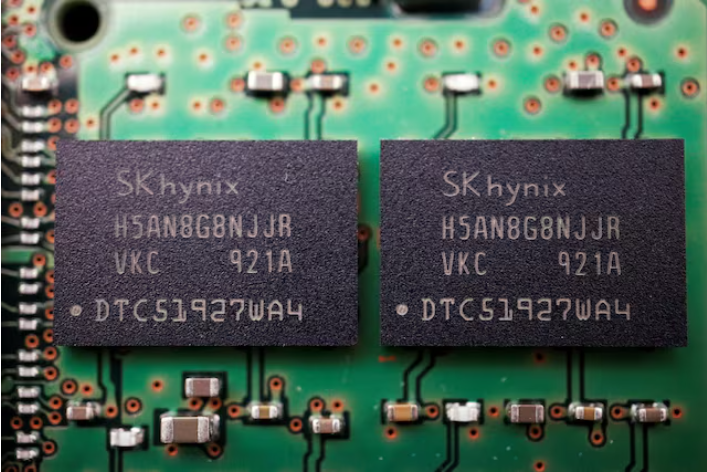

SK Hynix Preps Large-Scale DRAM Price Hike, DDR5 Up To 20% Expensive

2024.08.22

Qualcomm, Motorola, Rohde & Schwarz show 5G Broadcast innovation

2024.08.22

SK Hynix Is Developing Next-Gen HBM With 30x Performance Uplift

2024.08.23

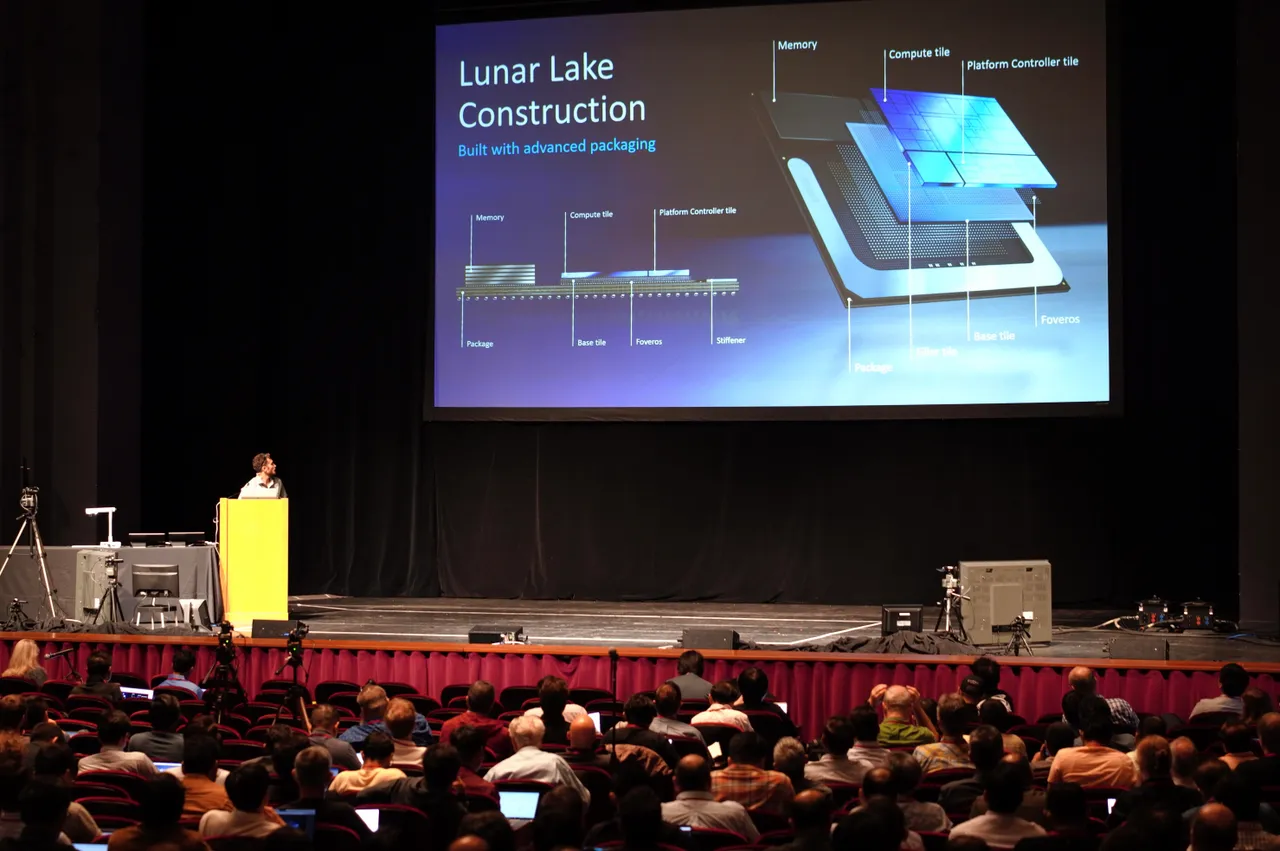

OpenAI, Intel, and Qualcomm talk AI compute at legendary Hot Chips conference

2024.08.27

Samsung Completes NVIDIA's Quality Test for HBM3E Memory, Begins Shipments

2024.09.04

TSMC supplier says AI chip market growth to accelerate, dismisses Nvidia wipeout

2024.09.05

SK Hynix to start mass producing HBM3E 12-layer chips this month

2024.09.05