At its Snapdragon Summit in Hawaii last week, I tried Qualcomm-made smart glasses with a fighter pilot-meets-cyberpunk-chic design, created to answer questions about whatever I looked at. Though not flawless, the glasses showcased Qualcomm's vision for a world where its chips power smart glasses with augmented and mixed reality, reshaping how we engage with our surroundings.

If last year's Snapdragon Summit was about introducing on-device generative AI, Qualcomm spent this year predicting the rise of so-called agentic AI, which answers queries by drawing on many sources of user information, including all of their apps. While smartphones will run large language models and handle on-device AI processing, they will be fed data by wearables and smart glasses for even more precise results. Or at least that's how Qualcomm envisions the future playing out.

The combination of smart glasses and more responsive AI should enable a future in which we're commanding our world instead of tapping through apps. That's according to Alex Katouzian, Qualcomm's senior vice president and general manager of mobile, compute and XR, who spoke with me in Hawaii.

"Apps will start to mean less and less," Katouzian said. "The apps are not going away, but as your interface to the device, as far as you're concerned, they should go away."

This projection looks far into the future, not to next year. But AI agents are expected to work off all the data fed into them by the growing network of devices people wear. While more consumers are wearing smartwatches, there are only so many ways to engage with them. Katouzian didn't go so far as to say that smartwatches are plateauing, but their potential is more limited than that of glasses. There are only a couple more watch features that make sense to add right now, like measuring blood pressure or glucose.

In comparison, smart glasses are expected to change how users interact with the world. According to Katouzian, the Ray-Ban Meta smart glasses, which debuted last year with Qualcomm's AR1 Gen 1 chip and recently gained additional AI features, have sold over 10 times their initial forecast. "Meta is really pushing that market forward," he said. "And the use of AI in those glasses really made it much more popular."

After Google Glass failed to gain consumer traction about a decade ago, and with devices like Microsoft's HoloLens and Magic Leap's headsets targeting enterprise use, no other smart glasses have gained momentum quite like the Ray-Ban Meta glasses, which dial down tech expectations to offer a simpler feature set. With them, you can ask AI questions via a voice assistant, make calls, take photos, shoot videos and play music.

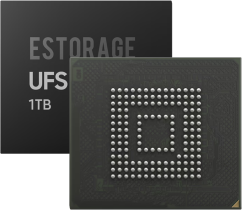

For Qualcomm, Meta's smart shades open the door to more complex augmented reality (AR) and mixed reality (MR) devices. Qualcomm has been expanding its Snapdragon Spaces platform to refine the software tools it offers developers building mixed-reality devices.

"There are new ways of interfacing with an XR device that you never do on the phone or laptop or anything," Katouzian said. "You look with your eyes to choose. Your head tracking is definitely a must. Your hand tracking is definitely a must."

Katouzian says the developer community using Snapdragon Spaces SDKs has grown to over 6,000 people, and that usage data is fed back to Qualcomm to refine its algorithms. The company can then take those solutions to a potential operating system partner, offering the platform as a ready-made route to developers.

A small sampling of Qualcomm's XR future

At the Snapdragon Summit, Qualcomm set up several demonstrations to showcase different AR applications and devices. Some of these involved existing devices, like a Meta Quest 3 headset showing off the company's new automotive chips, while Snap had an area letting attendees try out its not-yet-released Spectacles AR glasses (which run unnamed Qualcomm processors). Both support hand tracking, but I was more impressed by the Spectacles' accurate tracking, which enabled an array of fun games and experiences.

I also got a peek at Qualcomm's AR future through a reference device -- smart glasses that looked like a cross between Snap Spectacles and aviator sunglasses. (Sadly, Hawaii was too warm for my bomber jacket.) This demo was meant to test AI interactions on the glasses that are relayed through a nearby reference phone with a Snapdragon 8 Elite chip running on-device AI. Ultimately, it's supposed to be an early look at how users can ask questions about what they're looking at and get answers on the spot. It's an approach Google is tinkering with for its AI-powered Project Astra tech for smart glasses.

The experience wasn't great. In the game room of the hotel where the summit took place, I repeatedly asked about an object in my line of sight (a foosball table). After a couple of failures, the glasses recognized it as a soccer table and gave me rules for that sport. Then I asked follow-up questions, like "What are famous soccer movies?", and the glasses gave me a lengthy and detailed response, listing off five films I'd never heard of. Apart from that, they were comfortable to wear, and though their bug-eyed aviator style might be a bit off-putting, they felt more wearable than many other, bulkier smart glasses.

Other

Qualcomm Announces Snapdragon 7s Gen 3 For Mid-Range Devices With AI Capabilities

2024.08.21

SK Hynix Preps Large-Scale DRAM Price Hike, DDR5 Up To 20% Expensive

2024.08.22

Qualcomm, Motorola, Rohde & Schwarz show 5G Broadcast innovation

2024.08.22

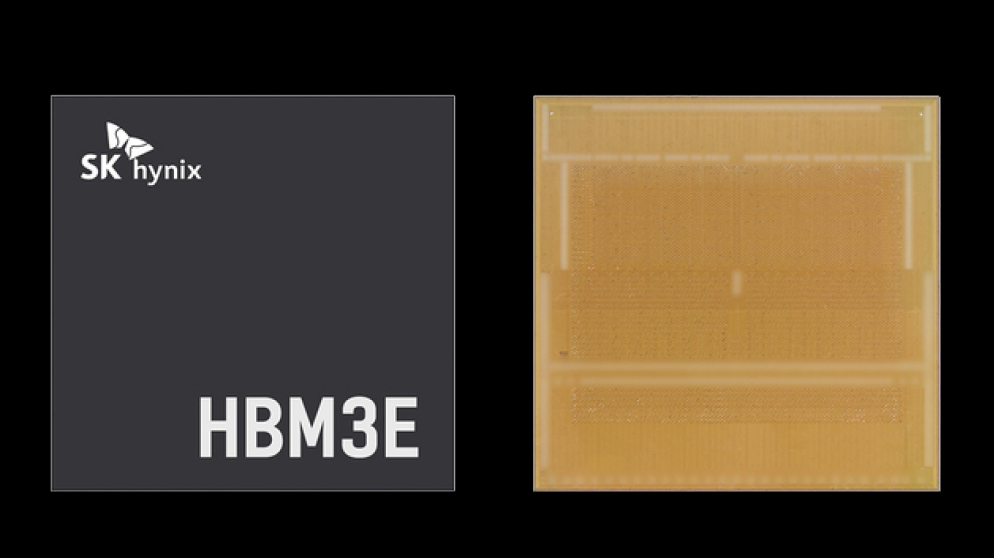

SK Hynix Is Developing Next-Gen HBM With 30x Performance Uplift

2024.08.23

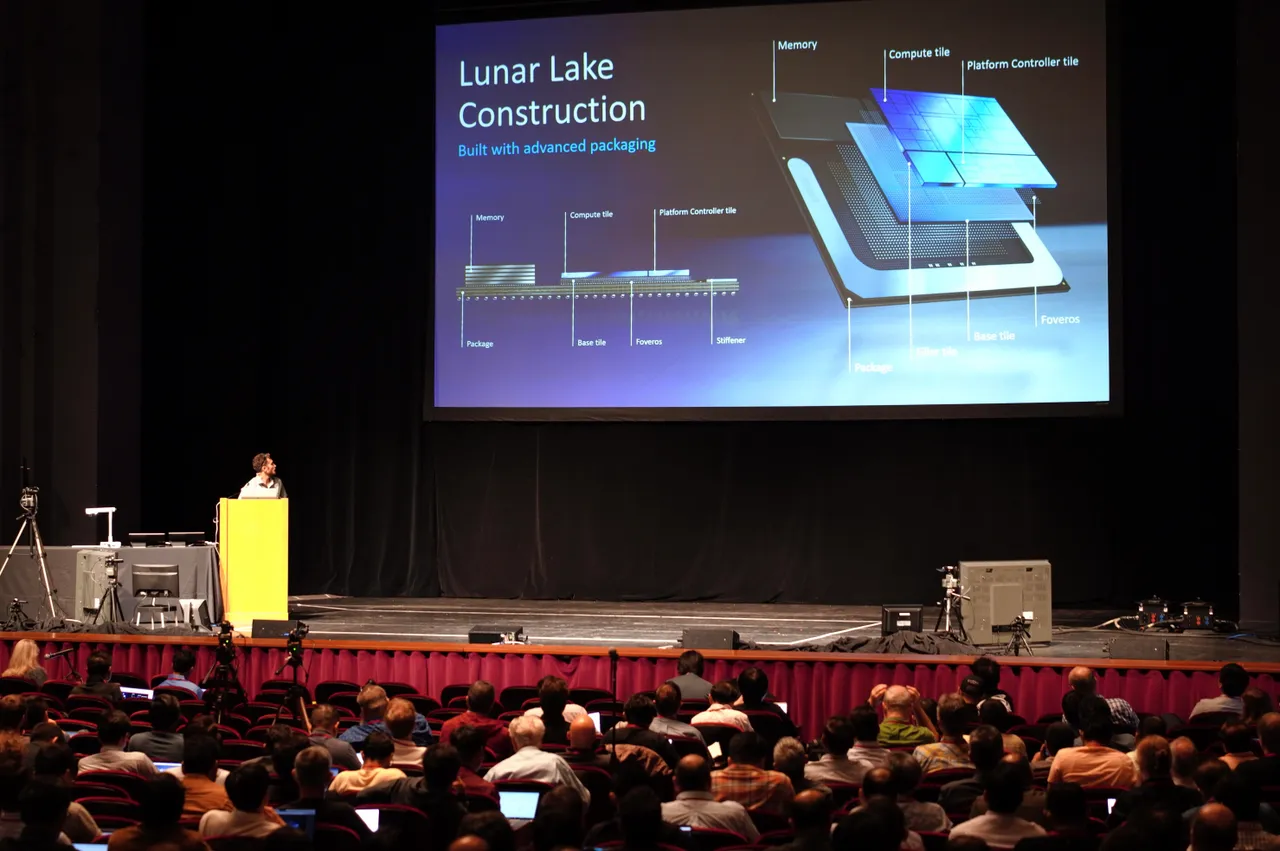

OpenAI, Intel, and Qualcomm talk AI compute at legendary Hot Chips conference

2024.08.27

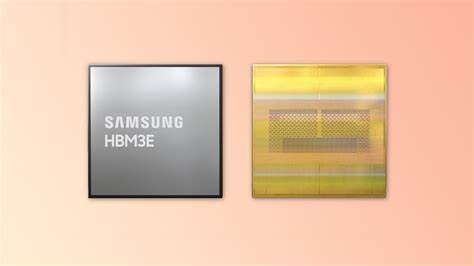

Samsung Completes NVIDIA's Quality Test for HBM3E Memory, Begins Shipments

2024.09.04

TSMC supplier says AI chip market growth to accelerate, dismisses Nvidia wipeout

2024.09.05

SK Hynix to start mass producing HBM3E 12-layer chips this month

2024.09.05